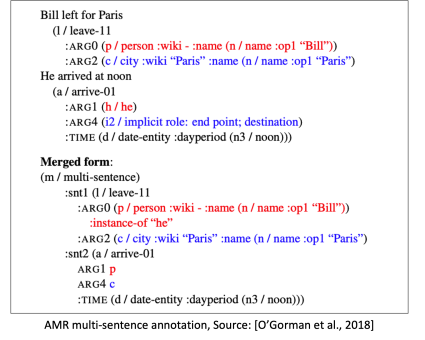

Abstract Meaning Representation (AMR) annotation was developed by LDC, SDL/Language Weaver, Inc., the University of Colorado's Computational Language and Educational Research group and the Information Sciences Institute at the University of Southern California. It is a semantic representation language that captures "who is doing what to whom" in a sentence. Each sentence is paired with a graph that represents its whole-sentence meaning in a tree-structure. AMR utilizes PropBank frames, non-core semantic roles, within-sentence coreference, named entity annotation, modality, negation, questions, quantities, and so on to represent the semantic structure of a sentence largely independent of its syntax.

LDC’s Catalog contains three cumulative English AMR publications: Release 1.0 (LDC2014T12), Release 2.0 (LDC2017T10), and Release 3.0 (LDC2020T02). The combined result in AMR 3.0 is a semantic treebank of roughly 59,255 English natural language sentences from broadcast conversations, newswire, weblogs, web discussion forums, fiction and web text and includes multi-sentence annotations.

LDC has also published Chinese Abstract Meaning Representation 1.0 (LDC2019T07) and 2.0 (LDC2021T13) developed by Brandeis University and Nanjing Normal University. These corpora contain AMR annotations for approximately 20,000 sentences from Chinese Treebank 8.0 (LDC2013T21). Chinese AMR follows the basic principles developed for English, making adaptations where necessary to accommodate Chinese phenomena.

Abstract Meaning Representation 2.0 - Four Translations (LDC2020T07), developed by the University of Edinburgh, School of Informatics, consists of Spanish, German, Italian and Chinese Mandarin translations of a subset of sentences from AMR 2.0.

Visit LDC’s Catalog for more details about these publications.